SEMESTER SECOND

FINAL YEAR: BE

BRANCH: COMPUTER / CS

Subject: Deep Learning (Notes)

Machine Learning:

·

Machine learning is a subset of artificial

intelligence that allows systems to automatically learn and improve from

experience without being explicitly programmed.

·

In other words, it enables computer

programs to learn from data, recognize patterns, and make predictions or

decisions based on the learned patterns.

Machine Learning Model Process:

The machine learning process typically involves

the following steps:

1. Data Collection: The first step in the machine learning process is to collect relevant data from various sources, including databases, APIs, and external sources.

2. Data Preprocessing: The collected data needs to be preprocessed to ensure that it is in a suitable format for machine learning algorithms. This may involve tasks such as data cleaning, data transformation, and feature selection.

3. Data Splitting: The preprocessed data is then split into training, validation, and testing sets. The training set is used to train the machine learning model, the validation set is used to tune the model's hyperparameters, and the testing set is used to evaluate the model's performance.

4. Model Selection: The next step is to select a suitable machine learning model for the task at hand. This may involve choosing between different types of models, such as decision trees, neural networks, or support vector machines.

5. Model Training: The selected machine learning model is then trained on the training data set. During training, the model learns from the input data and adjusts its parameters to improve its performance on the task.

6. Model Evaluation: Once the model is trained, it is evaluated on the validation set to assess its performance. This step involves measuring various metrics such as accuracy, precision, and recall.

7. Model Tuning: Based on the evaluation results, the model's hyperparameters may be adjusted to improve its performance on the validation set. This process may involve fine-tuning the model's architecture, regularization techniques, or optimization algorithms.

8. Final Model Evaluation: The final step is to evaluate the model's performance on the testing set. This step provides an unbiased estimate of the model's performance on new, unseen data.

9. Model Deployment: If the model performs well on the testing set, it can be deployed in a production environment to make predictions on new data. This may involve integrating the model into an application or API that can be used by end-users.
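The splitting and evaluation steps above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function names `split_dataset` and `classification_metrics`, the 70/15/15 split, and the toy labels are assumptions made for this example, not part of the notes.

```python
import random

def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle rows and split them into training, validation, and testing sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is the testing set
    return train, val, test

def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

# Toy usage: 100 example ids, and five hand-written labels/predictions.
train, val, test = split_dataset(range(100))
acc, prec, rec = classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
```

In practice the validation metrics would guide tuning (steps 6 and 7), and the testing set would be touched only once, for the final evaluation (step 8).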

Advantages of machine learning:

1. Improved accuracy: Machine learning algorithms can often achieve higher accuracy than traditional rule-based methods.

2. Automation: Machine learning can automate complex and repetitive tasks, saving time and effort for humans.

3. Scalability: Machine learning algorithms can be applied to large datasets and can be easily scaled up to handle more data.

4. Personalization: Machine learning algorithms can learn from user data to provide personalized recommendations and experiences.

Disadvantages of machine learning:

1. Data dependency: Machine learning algorithms require large amounts of data to train effectively, and the quality of the output is highly dependent on the quality of the input data.

2. Interpretability: Machine learning models can be difficult to interpret, which can be a challenge for understanding how decisions are made.

3. Bias: Machine learning models can be biased towards certain groups or outcomes, depending on the input data and how the algorithm is designed.

4. Vulnerability: Machine learning models can be vulnerable to attacks, such as adversarial attacks, which can cause the model to make incorrect predictions.

Difference Between Machine Learning and

Deep Learning

| | Machine Learning | Deep Learning |
| --- | --- | --- |
| Architecture | Models typically have a simpler architecture | Models are composed of multiple layers of interconnected nodes |
| Data size | Models are effective with small to medium-sized datasets | Models usually require large amounts of data to train effectively |
| Feature extraction | Usually requires humans to manually extract features from data | Models can automatically learn features from raw data |
| Algorithm complexity | Algorithms tend to be simpler and more interpretable | Algorithms are more complex and less interpretable |
| Domain expertise | Typically requires domain expertise to identify relevant features and select appropriate algorithms | Can learn relevant features automatically |
| Training time | Models can usually be trained quickly | Models require a lot of computational power and time to train |

Difference Between Supervised and Unsupervised

Learning

| Supervised Learning | Unsupervised Learning |
| --- | --- |
| The input data consists of labeled examples. | The input data consists of unlabeled examples. |
| The goal is to learn a function that maps input data to output data. | The goal is to find patterns and structure in the input data. |
| The model receives feedback in the form of labels or error signals that indicate how well it is performing on the task. | There is no explicit feedback, and the model must find its own structure in the input data. |
| Models require a large amount of labeled data to train effectively. | Models do not need labels, and unlabeled data is usually cheaper to obtain than labeled data. |
| Models are often more complex, although this depends on the algorithm. | Models are often less complex, although this also depends on the algorithm. |
| The model is typically evaluated on a held-out set of labeled data. | The model's performance is often evaluated by how well it can reproduce or transform the input data. |
| Examples include image classification, speech recognition, and language translation. | Examples include clustering, dimensionality reduction, and anomaly detection. |

Bias And Variance

Bias and variance are two important concepts in

machine learning that describe the behavior of a model on different datasets.

Bias:

·

Bias refers to the difference between the

expected value of the predictions made by a model and the true values of the

target variable.

·

In other words, bias measures how much the

model's predictions deviate from the true values due to assumptions,

simplifications, or limitations in the modeling process

·

Example: Suppose you want to predict the

heights of children based on their age. If you assume that all children grow at

the same rate and use a linear model that only considers age as a feature, your

model will have high bias and will underestimate the heights of taller children

and overestimate the heights of shorter children.

Variance:

·

Variance refers to the variability of the

model's predictions on different datasets

·

In other words, variance measures how much

the model's predictions change when the training data is perturbed. High

variance usually means that the model is too

complex and can fit the noise in the data instead of the underlying patterns.

·

Example: Suppose you want to predict the

performance of a student on a test based on their study hours and sleep hours.

If you use a deep neural network with many layers and parameters, your model

will have high variance and will perform well on the training set but may

generalize poorly to new data. This is because the model is too flexible and

can memorize the training examples instead of learning the true relationship

between the features and the target.

To achieve good performance, you need to find a

balance between bias and variance by selecting an appropriate model complexity,

regularization techniques, and evaluation metrics. This can help you identify

the sweet spot where your model has low bias and low variance and can

generalize well to new data.
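A small simulation can make the two definitions concrete. The sketch below is an illustration under assumed settings, not a method from these notes: the true function `true_f`, the noise level, the query point `x0 = 0.9`, and the two toy models (a constant "mean" predictor and a 1-nearest-neighbour predictor) are all chosen only for this example. It retrains each model on many freshly sampled training sets and estimates the squared bias and the variance of its prediction at `x0`.

```python
import random
import statistics

def true_f(x):
    # Assumed ground-truth relationship for this toy experiment.
    return x * x

def sample_training_set(rng, n=20, noise_sd=0.3):
    xs = [rng.random() for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def predict_mean(xs, ys, x0):
    # Very simple model: ignores x0 and always predicts the average label.
    return sum(ys) / len(ys)

def predict_1nn(xs, ys, x0):
    # Very flexible model: copies the label of the nearest training point.
    i = min(range(len(xs)), key=lambda j: abs(xs[j] - x0))
    return ys[i]

def bias_sq_and_variance(predict, x0=0.9, trials=2000, seed=0):
    """Estimate squared bias and variance of predict(x0) over many training sets."""
    rng = random.Random(seed)
    preds = []
    for _ in range(trials):
        xs, ys = sample_training_set(rng)
        preds.append(predict(xs, ys, x0))
    bias = statistics.mean(preds) - true_f(x0)
    return bias ** 2, statistics.pvariance(preds)

bias2_mean, var_mean = bias_sq_and_variance(predict_mean)
bias2_1nn, var_1nn = bias_sq_and_variance(predict_1nn)
print(f"mean predictor: bias^2={bias2_mean:.3f} variance={var_mean:.3f}")
print(f"1-NN predictor: bias^2={bias2_1nn:.3f} variance={var_1nn:.3f}")
```

Under these assumptions the constant predictor shows the larger squared bias, while the 1-nearest-neighbour predictor shows the larger variance, which mirrors the two examples above.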

Bias Variance Tradeoffs

·

Bias refers to the error that is

introduced by approximating a real-world problem with a simplified model, while

variance refers to the amount by which the model's predictions vary when the

training data changes.

·

A model with high bias tends to underfit

the training data, while a model with high variance tends to overfit the

training data

·

To achieve good generalization

performance, we need to find the right balance between bias and variance.

·

Increasing the complexity of the model can

reduce bias, but it may increase variance.

·

Decreasing the complexity of the model can

reduce variance, but it may increase bias.

·

The bias-variance trade-off is often

visualized by plotting error against model complexity: bias decreases and variance

increases as complexity grows, so the total error follows a "U-shaped" curve.

·

The optimal point is the point where the

model achieves the best trade-off between bias and variance, and performs best

on new, unseen data.

·

Finding the optimal point may involve

adjusting the model architecture, the training data, or adding regularization

techniques.

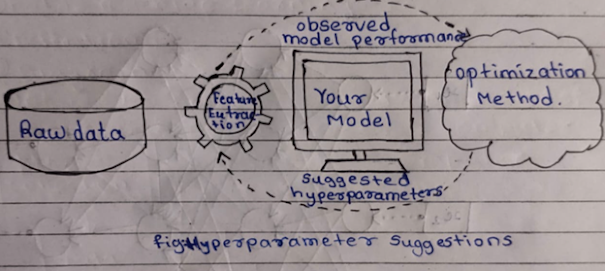
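For squared-error loss the trade-off can be written as a decomposition of the expected prediction error. The notation here is introduced only for this note and is an assumption: the data are taken to follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the model fitted on a random training set, and the expectation is over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The first term typically shrinks and the second grows as model complexity increases, while the noise term cannot be reduced by any choice of model.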

Hyperparameters:

·

Hyperparameters are parameters of a

machine learning algorithm that are set by the user, rather than learned from

the data.

·

They are often referred to as

"tuning" parameters because adjusting their values can affect the

performance of the model.

·

Hyperparameters can include things like

learning rates, regularization strengths, number of hidden layers, number of trees,

and kernel types.

·

Choosing the right hyperparameters is

important because they can make the difference between a good model and a great

one.

·

Techniques for selecting hyperparameters

include grid search, random search, and Bayesian optimization.

Examples of

hyperparameters include:

1. Learning rate: controls how much the weights of the model are adjusted in each iteration of the training process.

2. Regularization strength: controls the degree of regularization applied to prevent overfitting.

3. Number of hidden layers: affects the model's ability to capture complex patterns in the data.

4. Kernel type: determines the shape of the decision boundary in support vector machines (SVMs).

5. Batch size: the number of training examples used in each iteration of the optimization algorithm in deep learning.
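Grid search, one of the selection techniques mentioned above, can be sketched as an exhaustive loop over candidate values. The sketch is illustrative only: `grid_search` and `toy_validation_score` are hypothetical names, and the scoring function is a stand-in for "train a model with these hyperparameters and return its validation score".

```python
import itertools

def grid_search(param_grid, score_fn):
    """Try every combination in param_grid and return the best one by score."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_validation_score(params):
    # Hypothetical stand-in: pretends the best settings are
    # learning_rate=0.01 and reg_strength=0.1.
    return (-(params["learning_rate"] - 0.01) ** 2
            - (params["reg_strength"] - 0.1) ** 2)

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "reg_strength": [0.0, 0.1, 1.0],
}
best_params, best_score = grid_search(grid, toy_validation_score)
```

Random search would replace the exhaustive `itertools.product` loop with a fixed number of randomly sampled combinations, which tends to scale better when there are many hyperparameters.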

Regularization is a

technique used in machine learning to prevent overfitting and improve the

generalization performance of the model. Overfitting occurs when the model

learns the noise or random fluctuations in the training data, leading to poor

performance on new, unseen data.

Key points about regularization:

·

Regularization involves adding a penalty

term to the loss function of the model, which discourages the model from

learning overly complex or sensitive relationships between the input and

output.

·

The penalty term can take different forms,

such as L1 or L2 regularization, which encourage sparsity or smoothness in the

weights of the model.

·

Regularization can be applied to a variety

of machine learning algorithms, including linear regression, logistic

regression, support vector machines, and neural networks.

·

The strength of the regularization term is

controlled by a hyperparameter, which is typically set using cross-validation

or other techniques.

·

The purpose of regularization is to

improve the generalization performance of the model, which means it is able to

perform well on new, unseen data.

·

Regularization can help prevent

overfitting by reducing the variance of the model, usually at the cost of a small increase in bias.

·

Regularization can also help with feature

selection: L1 regularization in particular can shrink the weights of noisy or

irrelevant features to exactly zero, so the model focuses on the most important ones.

·

Regularization is a powerful tool for

improving the robustness and reliability of machine learning models, and is

widely used in practice.
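The effect of the penalty term can be seen on the smallest possible case. The sketch below assumes a one-feature linear model without intercept, `y ≈ w * x`, with an L2 penalty `lam * w**2` added to the sum of squared errors; the function names and the toy data are made up for illustration. For this case the minimizer has the closed form `w = sum(x*y) / (sum(x*x) + lam)`.

```python
def l2_penalized_loss(w, xs, ys, lam):
    """Sum of squared errors plus the L2 penalty lam * w^2."""
    data_loss = sum((y - w * x) ** 2 for x, y in zip(xs, ys))
    return data_loss + lam * w ** 2

def fit_ridge_1d(xs, ys, lam):
    """Weight that minimizes l2_penalized_loss for a one-feature model."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Toy data that roughly follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# A larger regularization strength shrinks the learned weight towards zero.
weights = {lam: fit_ridge_1d(xs, ys, lam) for lam in (0.0, 1.0, 10.0, 100.0)}
for lam, w in weights.items():
    print(f"lam={lam:>5}: w={w:.3f}")
```

The regularization strength `lam` is the hyperparameter mentioned above; in practice it would be chosen with cross-validation rather than fixed by hand.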

Difference between Overfitting and

Underfitting

| Underfitting | Overfitting |
| --- | --- |
| Underfitting occurs when the model is too simple or constrained to capture the patterns in the training data. | Overfitting occurs when the model is too complex or flexible and starts to memorize the noise or random fluctuations in the training data. |
| This can happen when the model is not expressive enough (e.g., too few features, too few hidden layers), or when the regularization strength is too high. | This can happen when the model is too expressive (e.g., too many features, too many hidden layers), or when the regularization strength is too low. |
| Underfitting leads to high bias and low variance, meaning the model is not able to capture the important relationships between the input and output. | Overfitting leads to low bias and high variance, meaning the model is too sensitive to the training data and not able to generalize well to new, unseen data. |
| The training and validation errors are both high, indicating that the model has not captured the underlying patterns even in the training data. | The training error is low, but the validation error is high, indicating that the model is overfitting to the training data. |
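The last row of the comparison suggests a rough diagnostic based on training and validation error. The helper below is a heuristic sketch only: the name `diagnose_fit` and both thresholds (`high_error`, `max_gap`) are assumptions for illustration, and sensible values depend entirely on the task and the error metric.

```python
def diagnose_fit(train_error, val_error, high_error=0.2, max_gap=0.1):
    """Label a model from its training and validation error rates.

    high_error and max_gap are illustrative thresholds, not standard values.
    """
    if train_error >= high_error:
        # The model cannot even fit the training data well.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Good on training data, much worse on held-out data.
        return "overfitting"
    return "reasonable fit"

print(diagnose_fit(0.35, 0.37))  # both errors high
print(diagnose_fit(0.02, 0.30))  # low training error, high validation error
print(diagnose_fit(0.08, 0.10))  # both errors low and close together
```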

Limitations of Machine Learning

1. Dependence on quality and quantity of data: Machine learning models require large amounts of high-quality data to learn and make accurate predictions. Limited or low-quality data can lead to inaccurate results.

2. Lack of transparency: Some machine learning models are considered "black boxes" because it's challenging to understand how they arrive at their decisions, making it difficult to troubleshoot issues or identify biases.

3. Bias and discrimination: Machine learning models can perpetuate biases and discrimination if the training data used is biased or if the algorithm itself has an inherent bias.

4. Overfitting: Overfitting occurs when a machine learning model becomes too complex and fits the training data too closely. This can lead to poor performance on new data.

5. Limited generalizability: Machine learning models may perform well on the training data but struggle to generalize to new or unseen data.

6. Lack of human intuition: Machine learning models cannot replicate human intuition, which can be valuable in decision-making processes.

7. Security risks: Machine learning models can be vulnerable to attacks, including adversarial attacks, data poisoning, and model stealing.

8. Cost and resource-intensive: Machine learning requires significant computing resources and can be expensive to implement and maintain.

9. Interpretability: Some machine learning models can be difficult to interpret, making it challenging to identify the cause of errors or validate results.

10. Ethical concerns: Machine learning can raise ethical concerns, such as privacy violations, employment discrimination, and the potential for misuse.

History of Deep Learning

·

Neural Networks in the 1940s:

The idea of neural networks, which are the foundation of deep learning, was

first introduced in the 1940s by Warren McCulloch and Walter Pitts.

·

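Backpropagation Algorithm in the

1970s and 1980s: The ideas behind the backpropagation algorithm were developed in the 1970s,

and the algorithm was popularized for training neural networks in 1986 by Rumelhart, Hinton, and Williams.

It allowed multi-layer neural networks to learn from data and improve their accuracy.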

·

Convolutional Neural Networks in the

1980s: In the 1980s, Yann LeCun developed convolutional

neural networks (CNNs), which are a type of neural network specifically

designed for image recognition.

·

Recurrent Neural Networks in the

1990s: In the 1990s, recurrent neural networks (RNNs) were

developed, which are able to process sequential data such as language and

speech.

·

Big Data and GPUs in the 2000s:

In the 2000s, the availability of big data and the development of graphics

processing units (GPUs) made it possible to train deeper and more complex

neural networks.

·

ImageNet and Deep Learning Explosion

in 2010s: In 2012, Alex Krizhevsky and his team used deep

learning techniques to win the ImageNet image classification competition, which

sparked a wave of interest and investment in deep learning.

·

Advancements in Deep Learning

Applications in 2010s: In the 2010s, deep learning was

applied to a wide range of fields, including natural language processing,

speech recognition, autonomous driving, and medical diagnosis.

·

Current Developments and Future

Outlook: Current developments in deep learning include

reinforcement learning, generative models, and explainable AI. The future

outlook for deep learning is promising, with continued advancements in hardware

and algorithms expected to drive further progress.

What is Deep Learning :

·

Deep learning is a subfield of machine

learning.

·

It involves the use of artificial neural

networks to model and solve complex problems.

·

The neural network consists of multiple

layers of interconnected nodes that process information in a hierarchical

manner.

·

Deep learning is particularly effective

for tasks such as image recognition, natural language processing, and speech

recognition.

·

It has achieved breakthroughs in a wide

range of fields, including computer vision, robotics, and natural language

processing.

·

Deep learning is widely regarded as one of

the most promising areas of artificial intelligence research.

Advantages of deep learning:

1. State-of-the-art performance: Deep learning algorithms have achieved state-of-the-art performance on many tasks, such as image recognition and natural language processing.

2. Feature learning: Deep learning models can learn to automatically extract features from raw data, reducing the need for manual feature engineering.

3. Scalability: Deep learning algorithms can be scaled up to handle large datasets and complex tasks.

4. Flexibility: Deep learning models can be applied to a wide range of tasks and can be adapted to new tasks with transfer learning.

Disadvantages of deep learning:

1. Computationally expensive: Deep learning algorithms require significant computational resources, such as GPUs, to train effectively.

2. Large amounts of data: Deep learning models require large amounts of data to train effectively, which can be a challenge for some applications.

3. Overfitting: Deep learning models can be prone to overfitting on the training data, which can lead to poor performance on new data.

4. Interpretability: Deep learning models can be difficult to interpret, which can be a challenge for understanding how decisions are made.

Learning representation of data

·

Learning representation of data refers to

the process of extracting useful features from raw data that can be used by

machine learning algorithms to make accurate predictions. Here are some key

points that explain learning representation of data:

·

Raw data is often too complex and

high-dimensional to be used directly by machine learning algorithms. Therefore,

it needs to be preprocessed to extract useful features.

·

Traditional feature engineering involves

manually selecting and transforming features based on domain knowledge. This

approach can be time-consuming and may not always lead to the best features.

·

Deep learning algorithms, on the other

hand, can automatically learn representations of data through a process called

feature learning. This involves training a neural network to extract features

from the raw input data.

·

Feature learning can be unsupervised or supervised.

In unsupervised learning, the neural network is trained to learn patterns and

structure in the data without explicit labels. In supervised learning, the

neural network is trained to learn features that are relevant to a specific

prediction task.

·

Deep learning models can learn

hierarchical representations of data, where features at higher layers of the

model capture more abstract and complex concepts. This can lead to better

performance on tasks such as image recognition, speech recognition, and natural

language processing.

·

Learning representations of data can also

be used for transfer learning, where a pre-trained model is used as a starting

point for a new prediction task. This can significantly reduce the amount of

training data required and improve the accuracy of the model.

·

In summary, learning representation of

data involves automatically extracting useful features from raw data using deep

learning algorithms, leading to better performance on prediction tasks and

enabling transfer learning.

Understanding How Deep Learning works in Three

Figures:

First figure:

·

A neural network is composed of layers of

interconnected "neurons"

·

Each neuron in a layer receives input from

the previous layer, processes it, and sends it on to the next layer

·

The input layer receives the raw data, and

the output layer produces the final output of the network

·

The layers in between the input and output

layers are called "hidden layers," and they are used to extract

features and representations of the data

·

Deep neural networks have several hidden

layers, allowing them to learn more complex features and relationships between

inputs and outputs
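The layer-by-layer flow described above can be sketched as a forward pass through a tiny fully connected network. This is a minimal illustration in plain Python: the weights are hand-picked rather than learned, and the helper names (`dense_layer`, `forward`) are assumptions for this example.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of the
    previous layer's outputs, adds its bias, and applies the activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass the input through every layer in order and return the final output."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x

# 2 inputs -> hidden layer with 2 neurons (ReLU) -> 1 output neuron (linear).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[2.0, 1.0]], [0.5], identity),
]
output = forward([3.0, 1.0], layers)
print(output)  # [5.5]
```

Here the hidden layer produces `[2.0, 1.0]` from the input `[3.0, 1.0]`, and the output neuron combines those two hidden values into a single number.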

Second figure:

·

In supervised learning, a dataset with

labeled examples is used to train the network

·

The network is presented with inputs and

the corresponding desired outputs

·

Its weights and biases are adjusted to

minimize the difference between the network's predictions and the desired

outputs

·

This process is repeated for many examples

in the dataset

·

The network gradually learns to make

accurate predictions on new, unseen examples

Third figure:

·

Forward propagation is the

process of passing input data through the layers of the neural network and

computing the output; backward propagation then passes the error back through

the layers to compute the gradient of the loss with respect to each weight

·

The weights of the network are then adjusted

using these gradients by an optimization algorithm, like Stochastic

Gradient Descent (SGD)

·

The network learns from data by minimizing

the error between the predicted output and the actual output through the

optimization process
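The training loop described in the second and third figures can be sketched for the simplest possible "network": a single linear neuron `pred = w * x + b` trained with stochastic gradient descent on squared error. The data, learning rate, and number of epochs are assumptions chosen for this illustration; the gradients are written out by hand instead of being computed by a backpropagation library.

```python
import random

def train_linear_sgd(data, lr=0.05, epochs=200, seed=0):
    """Fit pred = w * x + b by SGD on the squared error of one example at a time."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)             # visit the examples in a new order each epoch
        for x, y in data:
            pred = w * x + b          # forward pass
            error = pred - y
            grad_w = 2 * error * x    # backward pass: d(error^2)/dw
            grad_b = 2 * error        # backward pass: d(error^2)/db
            w -= lr * grad_w          # step against the gradient
            b -= lr * grad_b
    return w, b

# Noise-free toy data generated from y = 2x + 1 on the interval [-1, 1].
data = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-10, 11)]
w, b = train_linear_sgd(data)
print(f"w={w:.4f}, b={b:.4f}")
```

With these settings the learned parameters end up very close to the values used to generate the data. Real deep networks follow the same loop, with backpropagation supplying the gradients for every layer.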

Common Architectural Principles of Deep

Learning:

Principles:

·

Use of multiple layers:

Deep networks have multiple layers of neurons, allowing them to learn more

complex and abstract representations of the input data.

·

Non-linear activation function:

Each neuron in a deep network applies a non-linear activation function to a

weighted sum of the outputs of the previous layer. This allows the network to learn non-linear

relationships between inputs and outputs.

·

Gradient-based learning:

Deep networks are trained using a gradient-based optimization algorithm, such

as stochastic gradient descent. This involves computing the gradient of the

loss function with respect to the network parameters and updating them in the

direction of the negative gradient.

·

Backpropagation:

The gradients are computed using backpropagation, which is an efficient

algorithm for computing the gradients of the loss function with respect to each

parameter in the network.

·

Dropout:

Dropout is a regularization technique used in deep networks to prevent

overfitting. It involves randomly dropping out some of the neurons during

training, forcing the remaining neurons to learn more robust representations of

the input data.

·

Batch normalization:

Batch normalization is a technique used in deep networks

to improve training stability and performance, with a mild regularizing side effect. It involves normalizing the

inputs to each layer over each mini-batch to have zero mean and unit variance, followed by a learned scale and shift.

·

Convolutional layers:

Convolutional layers are specialized layers used in deep networks for

processing images and other spatial data. They apply a set of learned filters

to the input, producing a set of feature maps.

·

Recurrent layers:

Recurrent layers are specialized layers used in deep networks for processing

sequential data, such as text or speech. They maintain an internal state that

allows them to capture temporal dependencies in the input data.

By using these architectural principles, deep neural

networks can learn complex relationships in data and achieve state-of-the-art

performance in a wide range of applications, from image and speech recognition

to natural language processing and autonomous driving.
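Two of the principles above, dropout and batch normalization, are small enough to sketch in plain Python. The sketch makes some simplifying assumptions: dropout is shown in its common "inverted" form (kept activations are rescaled during training), batch normalization is applied to a single feature across one mini-batch, and the learned scale and shift used in real layers are omitted. The function names are made up for this example.

```python
import random

def inverted_dropout(activations, drop_prob, rng):
    """Zero each activation with probability drop_prob and rescale the rest,
    so the expected value of every activation stays unchanged."""
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

def batch_normalize(batch, eps=1e-5):
    """Normalize one feature across a mini-batch to zero mean, unit variance."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n
    return [(v - mean) / (var + eps) ** 0.5 for v in batch]

rng = random.Random(0)
dropped = inverted_dropout([1.0] * 1000, drop_prob=0.5, rng=rng)
normalized = batch_normalize([2.0, 4.0, 6.0, 8.0])
```

At prediction time dropout is switched off, and batch normalization typically uses running averages of the mean and variance collected during training instead of per-batch statistics.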

Architecture Design:

The architecture design of a deep neural network

involves several decisions such as:

·

Number of layers: Deep neural networks

have multiple hidden layers. Deciding on the number of layers requires

balancing the need for more complex representations with the risk of

overfitting.

·

Number of neurons: The number of neurons

in each layer determines the network's capacity to represent more complex features.

This decision also requires balancing the need for more neurons with the risk

of overfitting.

·

Type of activation function: Activation

functions introduce non-linearity into the neural network, enabling it to learn

complex functions. Common activation functions include sigmoid, ReLU, and tanh.

·

Type of layer: Different types of layers

serve different purposes. For example, convolutional layers are used in

computer vision tasks to extract features from images, while recurrent layers

are used in natural language processing tasks to model sequences of data.

·

Strategy for regularization:

Regularization techniques such as dropout and L2 regularization are used to

prevent overfitting and improve generalization performance.

·

Optimization algorithm: Gradient-based

optimization algorithms such as stochastic gradient descent (SGD) are used to

optimize the network's parameters during training.

The design of a deep neural network architecture

involves making informed decisions based on the characteristics of the problem

being solved, the size and complexity of the data, and the available

computational resources.
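The three activation functions named above are short enough to write out directly. This is an illustrative sketch in plain Python that works on single numbers; deep learning libraries provide vectorized versions of the same functions.

```python
import math

def sigmoid(z):
    """Squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive values through unchanged and maps negatives to 0."""
    return max(0.0, z)

def tanh(z):
    """Squashes any real number into the interval (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):+.3f}")
```

All three are non-linear, which is what lets stacked layers represent functions that a single linear layer cannot.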

Applications of Deep Learning:

1. Computer Vision: Deep learning is widely used for image and video recognition, object detection, face recognition, and self-driving cars.

2. Natural Language Processing (NLP): Deep learning is used for language translation, sentiment analysis, speech recognition, and chatbots.

3. Healthcare: Deep learning is used for medical image analysis, drug discovery, and disease diagnosis.

4. Finance: Deep learning is used for fraud detection, risk management, and stock price prediction.

5. Gaming: Deep learning is used for game playing, character animation, and game AI.

6. Robotics: Deep learning is used for object recognition, navigation, and control of robots.

7. Marketing: Deep learning is used for customer segmentation, personalized marketing, and recommendation systems.

8. Agriculture: Deep learning is used for crop yield prediction, disease detection, and precision farming.

These are just a few examples, and the applications of deep learning are constantly growing and evolving.

Introduction and Use of Popular Industry

Tools for Machine Learning and Deep Learning :

Popular industry tools such as TensorFlow, Keras,

PyTorch, Caffe, and Shogun are used for building and training machine learning and deep learning

models. These tools provide user-friendly, high-level

programming interfaces for implementing complex architectures with

ease. Here is a brief introduction to each tool:

1. TensorFlow: TensorFlow is an open-source software library developed by the Google Brain team for numerical computation and large-scale machine learning. It offers a variety of tools and libraries for building and training deep learning models.

2. Keras: Keras is a high-level neural network API. Earlier versions could run on top of TensorFlow, Theano, or CNTK; Keras 3 supports TensorFlow, JAX, and PyTorch as backends. It provides a simple and easy-to-use interface for building and training deep learning models.

3. PyTorch: PyTorch is an open-source machine learning library developed by Facebook's AI research team (now Meta AI). It provides a dynamic computational graph that allows for easy experimentation and debugging.

4. Caffe: Caffe is a deep learning framework developed by Berkeley AI Research (BAIR). It is primarily used for image classification tasks and is known for its fast training speed.

5. Shogun: Shogun is an open-source machine learning library that supports a variety of algorithms and data types, with a focus on kernel methods such as support vector machines. It provides a modular and flexible architecture for building and training machine learning models.

Each tool has its own strengths and weaknesses, and

the choice of tool often depends on the specific application and the user's

preferences and expertise.